Interactive Job

It is helpful to run your work interactively and see the output of your commands right away, so you can catch errors in your workflow. To work interactively with more resources than the limits imposed on the dev nodes, HPCC users can submit an interactive job using the interact powertool or the salloc/srun commands, with options specifying the resources requested.

interact powertool

The interact powertool provides sensible defaults for launching interactive jobs on the HPCC. It is loaded as part of the default modules; alternatively, you can access it with module load powertools. When you run interact with no options, it requests 1 task on 1 core on 1 node for 1 hour. Your job will be queued; do not close your terminal while it waits. Once the job starts, you will be placed at a command prompt on the compute node assigned to your job. After that, you can close your terminal and reconnect to the interactive session by following the information at Connections to compute nodes.

Specifying other resources

To request resources beyond the defaults, you can use the following options:

| Option | Alternate | Description |
|---|---|---|
| -t <Time> | --time | Set a limit on the total run time. |
| -gpu | --gpu | Allocate 1 GPU and 16 CPUs |
| -N <Nodes> | --nodes | Number of nodes |
| -c <ncores> | | Number of cores |
| -n <ntasks> | | Number of tasks (spread over all nodes) |
| --ntasks-per-node=<ntasks> | | Number of tasks, 1 per core per node. |
| --mem=<MB> | | Real memory required per node in megabytes |

You can view the other options that interact accepts using the command

interact -h.
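
As a sketch of how the options in the table combine, the snippet below assembles an interact command line for 1 node, 4 cores, 8192 MB of memory and a 2-hour limit, and prints it instead of submitting it. The specific values are illustrative, and the HH:MM:SS time format is an assumption carried over from the salloc examples on this page; check interact -h for the forms it accepts.

```shell
# Illustrative values only; adjust to your own needs.
nodes=1               # -N <Nodes>
cores=4               # -c <ncores>
mem_mb=8192           # --mem=<MB>, memory per node in megabytes
walltime="02:00:00"   # -t <Time>; HH:MM:SS format is assumed here

# Assemble the command line and print it for review (nothing is submitted).
cmd="interact -N ${nodes} -c ${cores} --mem=${mem_mb} -t ${walltime}"
echo "${cmd}"
```

Running the printed command on a development node would submit the request.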

salloc command

For salloc, the command line

salloc -N 1 -c 2 --time=1:00:00

will allocate a job with 1 node, 2 cores, and a walltime of 1 hour. The command first waits until the job controller can provide the resources.

[username@dev-intel18 WorkDir]$ salloc -N 1 -c 2 --time=1:00:00

salloc: Required node not available (down, drained or reserved)

salloc: job 7625 queued and waiting for resources

Once the resources are available, the terminal moves to a command prompt on a compute node assigned to the job.

[username@dev-intel18 WorkDir]$ salloc -N 1 -c 2 --time=1:00:00

salloc: Required node not available (down, drained or reserved)

salloc: job 7625 queued and waiting for resources

salloc: job 7625 has been allocated resources

[username@test-skl-000 WorkDir]$

where "test-skl-000" after the symbol @ is the name of the assigned compute node.
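
Once the prompt has moved to the compute node, it can be useful to confirm what was actually allocated. The sketch below prints a few environment variables that Slurm normally sets inside a job (SLURM_JOB_ID, SLURM_JOB_NODELIST, SLURM_CPUS_PER_TASK). The function name show_allocation is made up for illustration, and the fallback text is printed when a variable is not set, for example on a development node.

```shell
# Print a short summary of the current Slurm allocation.
# show_allocation is a hypothetical helper name, not an HPCC tool.
show_allocation() {
    echo "Host:          $(uname -n)"
    echo "Job ID:        ${SLURM_JOB_ID:-not inside a Slurm job}"
    echo "Node list:     ${SLURM_JOB_NODELIST:-unknown}"
    echo "CPUs per task: ${SLURM_CPUS_PER_TASK:-not set}"
}

show_allocation
```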

salloc and GPUs

GPUs requested for an interactive job can now be used without submitting an additional srun. See our pages on our GPU resources and requesting GPUs for more information.

[username@dev-intel18 ~]$ salloc --gpus=k80:1 --time=1:00:00

salloc: Pending job allocation 28241766

salloc: job 28241766 queued and waiting for resources

salloc: job 28241766 has been allocated resources

salloc: Granted job allocation 28241766

salloc: Waiting for resource configuration

salloc: Nodes lac-195 are ready for job

[username@lac-195 ~]$ nvidia-smi

Thu Jan 4 14:34:03 2024

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.82.01 Driver Version: 470.82.01 CUDA Version: 11.4 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla K80 On | 00000000:0B:00.0 Off | 0 |

| N/A 40C P8 30W / 149W | 0MiB / 11441MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

srun command

The srun command can be used in a similar way:

[username@dev-intel18 WorkDir]$ srun -N 4 --ntasks-per-node=2 -t 00:60:00 --mem-per-cpu=1000M --pty /bin/bash

srun: Required node not available (down, drained or reserved)

srun: job 7636 queued and waiting for resources

srun: Granted job allocation 7636

[username@test-skl-000 WorkDir]$

As shown above, the option "--pty /bin/bash" is required for srun to start an interactive session. Any command executed in this kind of interactive job will be launched in parallel across the number of tasks requested. srun can also be used on a command line without "--pty /bin/bash". Refer to the srun web site for more details.
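
To make the size of the srun request above concrete, the arithmetic can be worked out in the shell. This is a sketch that assumes Slurm's default of one CPU per task, since the example does not pass -c; under that assumption --mem-per-cpu=1000M applies once per task.

```shell
nodes=4               # -N 4
tasks_per_node=2      # --ntasks-per-node=2
mem_per_cpu_mb=1000   # --mem-per-cpu=1000M
cpus_per_task=1       # assumed: Slurm default when -c is not given

# Total tasks across all nodes, and total memory across all CPUs.
total_tasks=$((nodes * tasks_per_node))
total_mem_mb=$((total_tasks * cpus_per_task * mem_per_cpu_mb))

echo "tasks: ${total_tasks}, memory: ${total_mem_mb} MB"
# prints: tasks: 8, memory: 8000 MB
```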

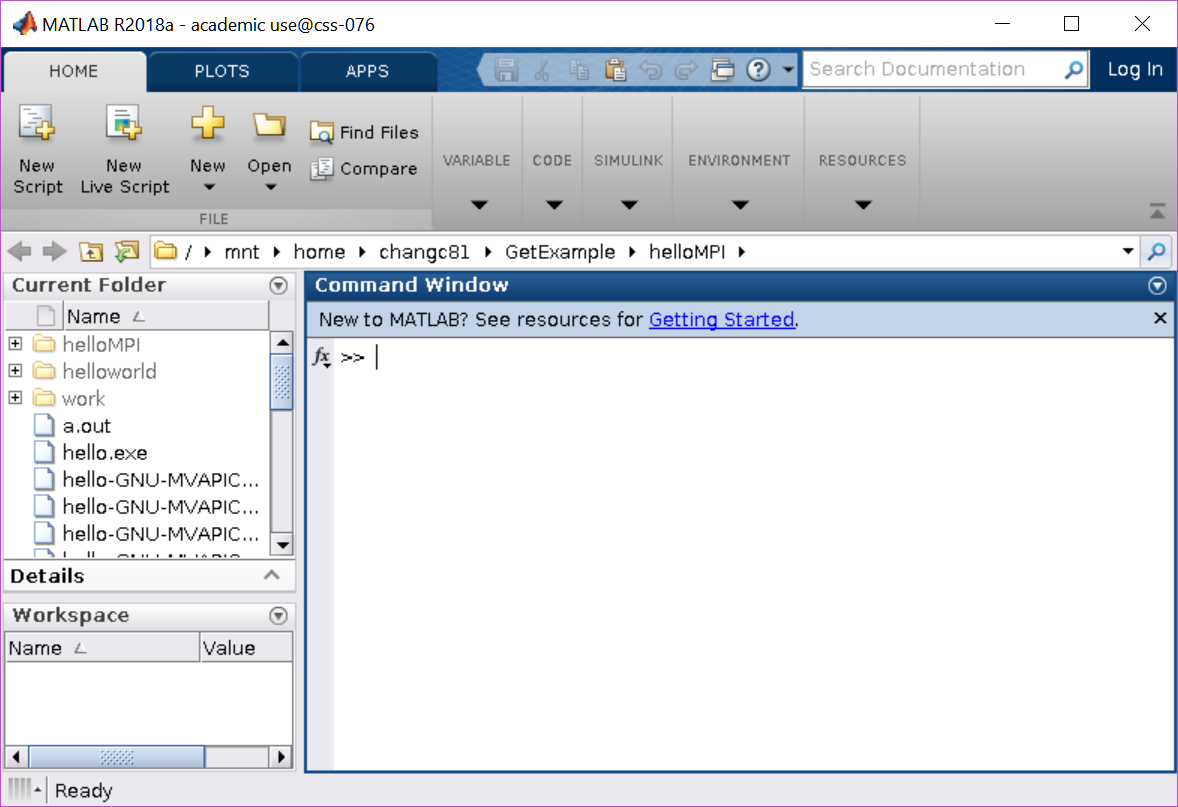

Job with graphical application

To schedule an interactive job that can run graphical user interface (GUI) software, add the --x11 option for X11 forwarding to the salloc or srun command. You must also use the -X parameter with ssh to allow X11 forwarding when connecting to both the gateway and development nodes before running the salloc command. If you are using the Mac Terminal, you must have XQuartz installed.

If you are on Windows and using MobaXterm to log in, these instructions will work with the -X parameter. PuTTY does not support X11, so this will not work with PuTTY.

The other option is to first log into our web-based remote desktop, and run the terminal there. See Web Site Access to HPCC for GUI software.

[username@gateway-03 ~]$ ssh -X dev-intel18

[username@dev-intel18 ~]$ cd WorkDir # this is optional, but you may want to select your work directory, for example

[username@dev-intel18 WorkDir]$ salloc --ntasks=1 --cpus-per-task 2 --time 00:30:00 --x11

salloc: Granted job allocation 7708

salloc: Waiting for resource configuration

salloc: Nodes css-076 are ready for job

[username@css-076 WorkDir]$ module load MATLAB

[username@css-076 WorkDir]$ matlab

MATLAB is selecting SOFTWARE OPENGL rendering.

Opening log file: /mnt/home/username/java.log.7159
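
Before starting a GUI program inside the job, a quick check that X11 forwarding is active can save a confusing failure. The sketch below tests the standard DISPLAY environment variable, which X11 forwarding normally sets. The function name check_display is made up for illustration.

```shell
# Report whether an X11 display appears to be available.
# check_display is a hypothetical helper name, not an HPCC tool.
check_display() {
    if [ -n "${DISPLAY:-}" ]; then
        echo "X11 display available: ${DISPLAY}"
    else
        echo "DISPLAY is not set; reconnect with ssh -X and request the job with --x11"
        return 1
    fi
}

check_display || echo "GUI applications will likely fail to open a window."
```

If the check fails, log out, reconnect to the gateway and development nodes with ssh -X, and request the job again with --x11.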