Compilers and Libraries

The HPCC organizes most compilers and libraries into "toolchains". A toolchain is a set of tools bundled together that can be used to build software. This may be as minimal as a single compiler, or as expansive as a compiler with MPI, linear algebra, CUDA, and other helpful libraries.

These toolchains are inherited from the program ICER uses to build much of its software, EasyBuild. For more information, please see the EasyBuild documentation pages for common toolchains and all toolchains.

Common toolchains

ICER primarily supports two toolchains on the HPCC: foss and intel. Each is versioned by year (with an a or b suffix for mid-year and end-of-year releases, respectively) and contains compilers and libraries that are compatible with each other.

foss toolchain

foss components

The foss toolchain is currently built from the following components (loading it is equivalent to running module load on each one individually):

GCC: composed of GCCcore and binutils

OpenMPI: MPI implementation for multi-process programs

FlexiBLAS: BLAS and LAPACK API (using OpenBLAS and reference LAPACK as backends) for linear algebra routines

FFTW: library for Fast Fourier Transforms

ScaLAPACK: parallel/distributed linear algebra routines

foss versions

To see the versions of this toolchain available on the HPCC, use

module spider foss

and to see the versions of each component, use

module show foss/<version>

foss usage examples

Using OpenMP and shared libraries
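Here is a minimal sketch, assuming a foss module is loaded. The C function below parallelizes a dot product with OpenMP. The name omp_dot is hypothetical. With the libraries in foss, the same result could instead come from FlexiBLAS's cblas_ddot, linked with -lflexiblas. Check the header location and link flags for the version you load.

```c
#include <stddef.h>

/* Hypothetical helper: OpenMP-parallel dot product of two length-n vectors.
 * Build sketch (module version is a placeholder):
 *   module load foss/<version>
 *   gcc -O2 -fopenmp -c dot.c
 * Without -fopenmp the pragma is ignored and the loop simply runs serially. */
double omp_dot(const double *x, const double *y, size_t n)
{
    double sum = 0.0;
    /* Each thread accumulates a private partial sum; OpenMP adds them at the end. */
    #pragma omp parallel for reduction(+:sum)
    for (size_t i = 0; i < n; ++i) {
        sum += x[i] * y[i];
    }
    return sum;
}
```

Because floating-point addition is not associative, results with many threads can differ from a serial run in the last few bits.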

Using MPI
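Most MPI programs start by dividing the work among processes. The sketch below computes the contiguous range of items a given rank owns. The function name block_range is hypothetical. In a real program, rank and size would come from MPI_Comm_rank and MPI_Comm_size, and the program would be built with the foss toolchain's mpicc wrapper. The helper makes no MPI calls, so it can be compiled and checked on its own.

```c
/* Hypothetical helper: contiguous block decomposition of n items over `size` ranks.
 * In an MPI program, rank and size would come from MPI_Comm_rank / MPI_Comm_size.
 * Build sketch: module load foss/<version>; mpicc -O2 prog.c; launch inside a job.
 * The first n % size ranks get one extra item, so loads differ by at most one. */
void block_range(long n, int rank, int size, long *start, long *end)
{
    long base = n / size;   /* items every rank gets */
    long extra = n % size;  /* leftover items, one each for the lowest ranks */

    /* Ranks below `extra` own base + 1 items; the others own base items. */
    *start = rank * base + (rank < extra ? rank : extra);
    *end = *start + base + (rank < extra ? 1 : 0);
}
```

For example, splitting 10 items over 3 ranks gives the half-open ranges [0, 4), [4, 7), and [7, 10).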

foss sub-toolchains

If the entire foss toolchain has too many dependencies for your needs, consider one of the sub-toolchains:

GCC: composed of GCCcore and binutils

gompi: composed of GCC and OpenMPI

Or feel free to load the individual modules that you need by themselves.

intel toolchain

intel components

The intel toolchain is currently built from the following components (loading it is equivalent to running module load on each one individually):

intel-compilers: Intel's set of (classic and oneAPI) C/C++ and Fortran compilers, in addition to GCCcore and binutils

impi: Intel's MPI implementation for multi-process programs

imkl: Intel's BLAS and LAPACK implementations, with FFT and other math libraries

intel versions

To see the versions of this toolchain currently available on the HPCC, use

module spider intel

and to see the versions of each component, use

module show intel/<version>

intel usage examples

Using OpenMP and shared libraries
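Here is a comparable sketch for the intel toolchain. It assumes the oneAPI icx compiler; classic icc also accepts -qopenmp. The hypothetical function omp_axpy computes y = a*x + y in parallel. In practice you would normally call MKL's cblas_daxpy, which performs the same operation.

```c
#include <stddef.h>

/* Hypothetical helper: y = a*x + y, with iterations split across OpenMP threads.
 * Build sketch (module version is a placeholder):
 *   module load intel/<version>
 *   icx -O2 -qopenmp -c axpy.c
 * MKL equivalent: cblas_daxpy(n, a, x, 1, y, 1). */
void omp_axpy(size_t n, double a, const double *x, double *y)
{
    /* Each iteration touches a distinct y[i], so no synchronization is needed. */
    #pragma omp parallel for schedule(static)
    for (size_t i = 0; i < n; ++i) {
        y[i] += a * x[i];
    }
}
```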

Using MPI
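An alternative to contiguous blocks is a cyclic (round-robin) distribution, where rank r handles items r, r + size, r + 2*size, and so on. The hypothetical helper below computes one rank's partial sum under that scheme. In an Intel MPI program, the partial sums would then be combined with MPI_Reduce using MPI_SUM. The compiler wrapper named in the comment is an assumption: mpiicc, or mpiicx in newer Intel MPI releases. Check it against the impi version you load.

```c
/* Hypothetical helper: the part of v[0] + ... + v[n-1] owned by one rank under a
 * cyclic distribution (rank r sums v[r], v[r + size], v[r + 2*size], ...).
 * In a real program the per-rank results would be combined with
 * MPI_Reduce(&partial, &total, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD).
 * Build sketch: module load intel/<version>; mpiicc -O2 prog.c */
double cyclic_partial_sum(const double *v, long n, int rank, int size)
{
    double partial = 0.0;
    for (long i = rank; i < n; i += size) {
        partial += v[i];
    }
    return partial;
}
```

Cyclic distribution helps when the cost per item grows with its index, since each rank then gets a mix of cheap and expensive items.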

intel sub-toolchains

If the entire intel toolchain has too many dependencies for your needs, consider one of the sub-toolchains:

intel-compilers: composed of GCCcore, binutils, and the Intel compilers themselves

iimpi: composed of intel-compilers and impi

Or feel free to load the individual modules that you need by themselves.

Mix and match

Certain components can be combined across toolchains. A notable example is the Intel Math Kernel Library (MKL), imkl, which can be loaded alongside any other compiler.

C example mixing GCC and MKL

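When linking against MKL from a GCC build, it helps to have a simple reference to check results against. The sketch below is a naive row-major matrix product. The name ref_dgemm is hypothetical. Up to rounding, it should agree with the cblas_dgemm call shown in its comment. The single dynamic library -lmkl_rt and the $MKLROOT paths are assumptions. Intel's linking documentation gives the exact flags for your configuration.

```c
#include <stddef.h>

/* Reference (naive) row-major product C = A * B, with A M x K, B K x N, C M x N.
 * Intended as a cross-check for an MKL call such as
 *   cblas_dgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans,
 *               M, N, K, 1.0, A, K, B, N, 0.0, C, N);
 * Build sketch for the MKL version (module versions are placeholders):
 *   module load GCC/<version> imkl/<version>
 *   gcc -O2 prog.c -I"$MKLROOT/include" -L"$MKLROOT/lib/intel64" -lmkl_rt */
void ref_dgemm(size_t M, size_t N, size_t K,
               const double *A, const double *B, double *C)
{
    for (size_t i = 0; i < M; ++i) {
        for (size_t j = 0; j < N; ++j) {
            double acc = 0.0;
            /* Dot product of row i of A with column j of B. */
            for (size_t k = 0; k < K; ++k) {
                acc += A[i * K + k] * B[k * N + j];
            }
            C[i * N + j] = acc;
        }
    }
}
```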

For more information on linking against MKL, see Intel's documentation.

Alternative compilers and toolchains

In addition to the foss and intel toolchains, select versions of other compilers are available (with limited support), including:

AOCC

AMD-optimized compilers for C and Fortran. These are based on LLVM and Clang, so they share Clang's command-line syntax.

See also the Optimizing for AMD CPUs documentation. It explains how to install AOCL, the AMD Optimizing CPU Libraries, which provide AMD-optimized math libraries, BLAS, FFTW, and others.

Using OpenMP
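Because AOCC uses Clang's command-line syntax, OpenMP is enabled with -fopenmp. Here is a minimal sketch. The function omp_pi and the module name in the comment are assumptions. The code approximates pi with the midpoint rule and splits the intervals across threads with a sum reduction.

```c
/* Hypothetical example: pi = integral from 0 to 1 of 4 / (1 + x^2) dx, computed
 * with the midpoint rule on n intervals, parallelized with an OpenMP reduction.
 * Build sketch (module version is a placeholder):
 *   module load AOCC/<version>
 *   clang -O2 -fopenmp -c pi.c */
double omp_pi(long n)
{
    const double h = 1.0 / (double)n;  /* width of each interval */
    double sum = 0.0;

    #pragma omp parallel for reduction(+:sum)
    for (long i = 0; i < n; ++i) {
        double x = (i + 0.5) * h;      /* midpoint of interval i */
        sum += 4.0 / (1.0 + x * x);
    }
    return sum * h;
}
```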

Using AOCL libraries

Clang

LLVM-based C and C++ compilers. For math libraries such as FlexiBLAS and FFTW, you will also need to load a compatible toolchain.

Using OpenMP
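Clang enables OpenMP with -fopenmp and uses LLVM's libomp runtime. The sketch below finds the largest absolute value in an array with an OpenMP max reduction, which requires OpenMP 3.1 or later. The name omp_max_abs and the module name in the comment are hypothetical.

```c
#include <stddef.h>

/* Hypothetical helper: max |x[i]| using an OpenMP max reduction (OpenMP >= 3.1).
 * Build sketch (module version is a placeholder):
 *   module load Clang/<version>
 *   clang -O2 -fopenmp -c maxabs.c
 * libomp must be findable at link time and at run time. Returns 0.0 for n == 0. */
double omp_max_abs(const double *x, size_t n)
{
    double best = 0.0;
    #pragma omp parallel for reduction(max:best)
    for (size_t i = 0; i < n; ++i) {
        double v = x[i] < 0.0 ? -x[i] : x[i];  /* absolute value without libm */
        if (v > best) {
            best = v;
        }
    }
    return best;
}
```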

NVHPC (formerly PGI)

The NVIDIA HPC Software Development Kit. This includes CUDA-compatible C and Fortran compilers, an OpenMPI implementation, and GPU-accelerated math libraries. It also includes implementations of BLAS and LAPACK, but for other CPU math libraries such as FFTW, you will need to load a compatible toolchain.

Note that these compilers are most effective for building software that runs on GPUs.

Using OpenMP and included shared libraries
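NVHPC's C compiler, nvc, enables OpenMP with -mp. Under that assumption, the hypothetical omp_gemv below computes a row-major matrix-vector product, with each thread handling whole rows. The bundled BLAS provides the equivalent dgemv routine. It is typically linked with -lblas, but confirm this against the NVHPC documentation for the installed version.

```c
#include <stddef.h>

/* Hypothetical helper: y = A * x for a row-major m x n matrix A.
 * Build sketch with NVHPC (module version is a placeholder):
 *   module load NVHPC/<version>
 *   nvc -O2 -mp -c gemv.c
 * Rows are independent, so each OpenMP thread writes its own y[i]. */
void omp_gemv(size_t m, size_t n, const double *A, const double *x, double *y)
{
    #pragma omp parallel for
    for (size_t i = 0; i < m; ++i) {
        double acc = 0.0;
        for (size_t j = 0; j < n; ++j) {
            acc += A[i * n + j] * x[j];
        }
        y[i] = acc;
    }
}
```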

Using outside CPU libraries

Using MPI

Alternative MPI implementations

Though we recommend OpenMPI or Intel MPI, older installations of MPICH and MVAPICH2 are available. These are generally only compatible with a single compiler module as they were built on a one-off basis.

You can see the available versions and how to load them with

module spider MPICH

or

module spider MVAPICH2

If OpenMPI, Intel MPI, or the available versions of MPICH and MVAPICH2 will not work for your needs, please contact us to discuss alternatives.

Basic Mathematical Library Benchmark

Possibly outdated

This benchmark was run when the AMD EPYC processors were installed (approximately 2020). The results may be outdated due to compiler improvements or hardware changes.

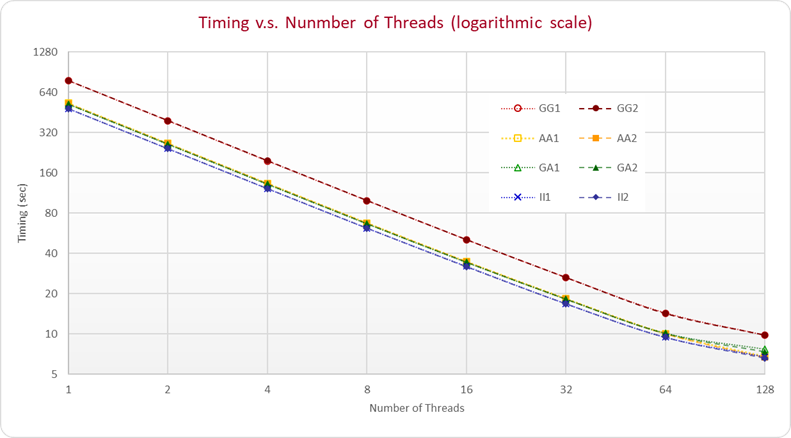

This section shows the results of a basic test of the mathematical libraries with different compilers on the AMD EPYC processors (i.e., those in the amd20 cluster). The test runs many calculations of sine, cosine, and logarithm functions in parallel. Each calculation is independent of the others, and the results are summed at the end. The test is a C program written with OpenMP multithreading and compiled with different compilers and libraries. Three letters (A, G, and I) followed by a digit (1 or 2) identify the different tests:

| Letter | First position (compiler) | Second position (basic math library) | Digit (thread placement) |

|---|---|---|---|

| A | AMD compiler | AMD basic math library | 1: all threads run on one socket |

| G | GNU compiler | GNU basic math library (-lm) | 2: threads evenly spread across two sockets |

| I | Intel compiler | Intel basic math library (included with the compiler) | |

where the letters in the first and second positions indicate the compiler and the basic math library in use, respectively. The performance results are shown in the figure.

All timing values are averages of ten runs. As the figure shows, parallel scaling is close to linear for all compilers, and the scaling efficiency is good (about 61% for AA1 and AA2). The Intel compiler with its own library performs best. The GCC and AMD compilers with the AMD basic math library also perform well. With 128 threads, the AMD compiler with the AMD library runs in very nearly the same time as the Intel compiler. The thread-placement tests show no difference between running all threads on one socket and spreading them across two sockets.
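The text does not state how the 61% efficiency figure was computed. Assuming the usual strong-scaling definitions (fixed problem size, speedup S(p) = T(1)/T(p), efficiency E(p) = S(p)/p), the two quantities can be computed as below. The function names are hypothetical, and no benchmark data is included.

```c
/* Strong-scaling metrics for a fixed problem size (assumed definitions):
 *   speedup    S(p) = T(1) / T(p)
 *   efficiency E(p) = S(p) / p = T(1) / (p * T(p))
 * t1 and tp are your own measured wall-clock times; p is the thread count. */
double speedup(double t1, double tp)
{
    return t1 / tp;
}

double strong_scaling_efficiency(double t1, double tp, int p)
{
    return speedup(t1, tp) / (double)p;
}
```

For instance, hypothetical timings of 100 s on one thread and 1 s on 128 threads give a speedup of 100 and an efficiency of 100/128, or about 78%.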

The same C program was also compiled and run on an intel18 node and an intel16 node. On the amd20 node with 128 threads, it ran about 3 times faster than on intel18 (40 threads) and 4.5 times faster than on intel16 (28 threads). These speedups are roughly proportional to the increase in thread count (128/40 = 3.2 and 128/28 ≈ 4.6).